English summary: This is a holiday special for my German C++ beginners book, hence this post is in German.

Dieser Blogpost erweitert Ihr Wissen aus dem Buch C++ Schnelleinstieg: Programmieren lernen in 14 Tagen. Nach dem Kapitel 9 (Fortgeschrittene Konzepte) haben Sie alle nötigen Grundlagen, die Sie als Voraussetzung für das Special benötigen:

- Grundlagen der Objektorientierung

- Bibliotheken mittels vcpkg einbinden

- GUI-Programmierung mit Nana

- Callbacks und Lambdas

- Fehler werfen

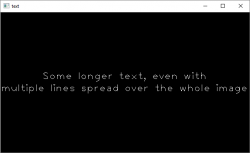

In diesem Projekt soll ein Weihnachtsbaum programmiert werden, bei dem Sie die Kerzen per Mausklick anzünden können. So sieht das ganze aus, wenn es fertig ist:

Das Codebeispiel beginnt in der main-Funktion des Programms und fügt nach und nach zusätzliche Komponenten oberhalb ein – daher beginnt die Zeilennummerierung so wie sie in der fertigen Datei sein wird (Downloadlink am Ende des Beitrags). Der Code wird durch Erklärungen unterbrochen, wie Sie an den fortlaufenden Zeilennummern erkennen können.

int main()

{

nana::paint::image tree("tree.png");

if (tree.empty())

{

/* Sie müssen die drei Bilder (tree.png, candleOn.png, candleOff.png) in den

Build-Ordner kopieren. Sie finden diesen, indem Sie in Visual Studio im

Projektmappen-Explorer einen Rechtsklick auf das Projekt durchführen und

"Ordner in Datei-Explorer öffnen" auswählen.

*/

throwException("Konnte das Baumbild nicht laden!");

}

Die Funktion throwException wird später noch implementiert. Die für das Programm benötigten drei Bilder des Baums, der erloschenen und der angezündeten Kerze finden Sie zusammen mit der fertigen Codedatei am Ende dieses Beitrags. Nun geht es um die grundlegende Struktur: Das Fenster sowie die Kerzen.

// Ein Nana-Formular als Basis des Fensters anlegen

nana::form window(nana::API::make_center(tree.size().width, tree.size().height));

window.caption("C++ Weihnachtsspecial 2021");

// Jede Codezeile beim Anlegen der Liste entspricht einem Stockwerk des Baumes

std::vector<Candle> candles = { Candle(290, 200),

Candle(200, 300), Candle(300, 330), Candle(400, 315),

Candle(120, 430), Candle(250, 450), Candle(350, 450), Candle(480, 450),

Candle(70, 580), Candle(170, 620), Candle(290, 630), Candle(420, 625), Candle(540, 600) };

Die Kerzen werden als Objekte modelliert und mit x- und y-Koordinaten angelegt. Die Klassendefinition folgt gleich, nachdem der Rest der main-Funktion besprochen wurde. Folgen Sie solange dem im Abschnitt 4.3 des Buches erläuterten Prinzip des “Wishful Programmings” und nehmen Sie einfach an, Sie hätten schon eine solche Klasse, die Ihnen alle Wünsche erfüllt. Nun sollen die Grafiken alle gezeichnet werden:

// Zeichnen des Baumes und der Kerzen

nana::drawing drawing(window);

drawing.draw([&](nana::paint::graphics& graphics)

{

tree.paste(graphics, nana::point(0, 0));

for (Candle& candle : candles)

{

candle.draw(graphics);

}

graphics.string(nana::point(10, static_cast<int>(window.size().height) - 20),

"C++ Schnelleinstieg: Programmieren lernen in 14 Tagen. Philipp Hasper, 2021");

});

Das Zeichnen der weihnachtlichen Szenerie übernimmt die Nana-Klasse nana::drawing innerhalb einer Lambdafunktion. Sie sehen hier zwei praktische Methoden in Aktion:

tree.paste() kopiert das Bild des Baumes an eine bestimmte Stelle – hier an die Stelle (0,0) was der linken oberen Ecke entspricht. Hierfür muss auch noch das graphics-Object übergeben werden, welches aus dem Parameter der Lambdafunktion kommt.graphics.string() schreibt einen Text an eine bestimmte Stelle. Hier soll es in die linke untere Ecke, daher muss für die y-Koordinate von der Höhe des Fensters genug Platz abgezogen werden, sodass der Text noch hinpasst.

Zum Schluss fehlen noch die Klickereignisse. Da die Kerzen als Grafiken gezeichnet wurden, haben sie kein automatisches Klick-Event wie zum Beispiel eine Schaltfläche. Daher muss jeder Klick ins Fenster abgefangen und darauf überprüft werden, ob seine Koordinaten innerhalb einer Kerze liegen:

// Jeden Klick überprüfen, ob er eine Kerze trifft

window.events().click([&](const nana::arg_click& event) {

if (event.mouse_args == nullptr)

{

// Event kann nicht verarbeitet werden

return;

}

for (Candle& candle : candles)

{

if (candle.isClicked(event.mouse_args->pos))

{

candle.toggle();

/* Hier könnte man mit einem return die Schleife verlassen.

Da sich aber bei falscher Platzierung die Kerzen überlagern

könnten, wird die Schleife noch zu Ende geführt.

*/

}

}

// Zeichnung wiederholen, sodass das Bild aktualisiert wird.

drawing.update();

});

// Fenster anzeigen und Nana starten

window.show();

nana::exec();

return 0;

}

Ob eine Kerze geklickt wurde, wird innerhalb der Candle-Klasse überprüft. Auch die Reaktion auf einen Klick ist in dieser Klasse implementiert. Fügen Sie daher diese Klasse oberhalb der main-Funktion ein:

// Klasse zum Zeichnen einer Kerze in zwei Zuständen (angezündet, ausgeblasen)

class Candle {

private:

nana::paint::image candleOn;

nana::paint::image candleOff;

bool isOn = false;

nana::rectangle rectangle;

public:

Candle(int centerX, int centerY)

: candleOn("candleOn.png"),

candleOff("candleOff.png")

{

if (candleOn.empty() || candleOff.empty())

{

throwException("Konnte die Kerzenbilder nicht laden!");

}

if (candleOn.size() != candleOff.size())

{

throwException("Kerzenbilder haben nicht die gleiche Größe!");

}

/* Die an den Konstruktor übergebene x - und y - Koordinate sollen das Zentrum

der Kerze angeben, daher müssen Sie für das Ziel-Rechteck umgerechnet werden.

*/

nana::size size = candleOn.size();

rectangle = nana::rectangle(centerX - size.width/2,

centerY - size.height/2,

size.width,

size.height);

}

void draw(nana::paint::graphics& graphics)

{

if (isOn)

{

candleOn.paste(graphics, rectangle.position());

}

else

{

candleOff.paste(graphics, rectangle.position());

}

}

bool isClicked(const nana::point& point)

{

// Diese Methode testet, ob der Punkt innerhalb des Rechtecks liegt

return rectangle.is_hit(point);

}

void toggle()

{

isOn = !isOn;

}

};

Diese Klasse handhabt zwei verschiedene Bilder, die an einem Punkt zentriert angezeigt werden sollen. Abhängig vom Zustand (isOn), wird das eine oder das andere Bild gezeichnet. Zur einfacheren Platzierung wird beim Erzeugen des Objektes das gewünschte Zentrum der Kerze angegeben, was dann innerhalb des Konstruktors in ein entsprechendes Rechteck umgerechnet werden muss.

Nun fehlt noch eine Hilfsfunktion, die eine Fehlermeldung auf der Konsole ausgibt und dann einen Fehler wirft. Fügen Sie diese am Anfang der Datei, nach den include-Befehlen ein:

// Funktion, einen Fehler auf der Konsole ausgibt und dann einen Fehler wirft

void throwException(const std::string& msg)

{

std::cout << msg << std::endl;

throw std::exception(msg.c_str());

}

Und das war auch schon das ganze Programm. Frohe Feiertage und einen guten Rutsch! Den vollständigen Code und die Bilder können Sie hier herunterladen: